Getting started

LLMAsAService.io is an LLM services layer that adds configurability, reliability, safety, and observability features. This frees you to concentrate on the prompts and AI features that matter to your customers, leaving the "non-functional requirements" to us.

How it works

From a developer's perspective, there is a single HTTP API you can call to fulfill LLM text completion requests. There are also more convenient ways to call our service:

- A React hook and higher-order component NPM library that abstracts away most of the configuration, leaving you with just the hook call and a send("This is the prompt"); call.

- A prebuilt multi-turn streaming chat component NPM library that makes adding text completion conversations and agents a snap.

Calls to our service API (chat.llmasaservice.io) handle all of the safety, failover, caching, PII redaction, prompt templating, logging, retry, and other magic. How these features behave is defined in the app.llmasaservice.io control panel. See the Administrators topics for detailed help.
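Failover and retry happen inside the service, so you never write this code yourself. To make the idea concrete, here is a minimal JavaScript sketch of the general pattern: retry a provider a fixed number of times, then fail over to the next one. It is an illustration only, not LLMAsAService's implementation. The provider objects and their complete() method are hypothetical stand-ins for real LLM vendor calls.

```javascript
// Illustrative failover-with-retry pattern (not LLMAsAService's actual code).
// Tries each provider in order, retrying a failing provider up to
// attemptsPerProvider times before failing over to the next one.
async function completeWithFailover(prompt, providers, attemptsPerProvider = 2) {
  const errors = [];
  for (const provider of providers) {
    for (let attempt = 1; attempt <= attemptsPerProvider; attempt++) {
      try {
        // Return the first successful completion.
        return await provider.complete(prompt);
      } catch (err) {
        // Record the failure, then retry or move on to the next provider.
        errors.push(`${provider.name} attempt ${attempt}: ${err.message}`);
      }
    }
  }
  throw new Error(`All providers failed:\n${errors.join("\n")}`);
}

// Hypothetical providers: the first always fails, the second succeeds.
const flakyProvider = {
  name: "primary",
  complete: async () => {
    throw new Error("service unavailable");
  },
};
const backupProvider = {
  name: "backup",
  complete: async (prompt) => `backup answer to: ${prompt}`,
};

completeWithFailover("What is 1+1=", [flakyProvider, backupProvider]).then(
  (text) => console.log(text) // backup answer to: What is 1+1=
);
```

A production version would typically add backoff between retries and treat retryable errors (timeouts, rate limits) differently from permanent ones. The sketch omits both to stay short.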

Tutorial - making your first call

This example shows how simple it is to stream the answer to a prompt onto a page in a React or Next.js application (or any other environment that supports React hooks).

From within your Next.js or React application, install our client package:

```bash
npm i llmasaservice-client
```

In the component where you want to use LLM text completion, import the hook, destructure the values it returns (send, response, and idle), and call send:

```jsx
"use client"; // required for hooks in the Next.js App Router; ignored elsewhere

import { useLLM } from "llmasaservice-client";

export default function ChatExample() {
  // get the project_id from the Embed page in the control panel
  const { send, response, idle } = useLLM({
    project_id: "[your LLMAsAService project id]",
  });

  const handleChatClick = () => {
    send("What is 1+1="); // calls the LLMs for a streaming response
  };

  // the response is streamed back and can be shown where needed
  return (
    <div>
      <button onClick={handleChatClick} disabled={!idle}>
        Call Chat
      </button>
      <div>{response}</div>
    </div>
  );
}
```
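The response value grows as chunks of the answer arrive. The plain JavaScript sketch below simulates that behavior so the roles of response and idle are easier to picture. It assumes idle is false while a response is streaming, which is what disabled={!idle} in the tutorial suggests. fakeCompletionStream and consumeStream are hypothetical helpers written for this illustration, not part of llmasaservice-client.

```javascript
// Hypothetical stand-in for a streamed completion: yields text in chunks,
// the way a streaming LLM response arrives a few tokens at a time.
function* fakeCompletionStream(chunks) {
  for (const chunk of chunks) {
    yield chunk;
  }
}

// Appends each chunk to the text received so far and reports every update.
// idle stays false while chunks arrive and becomes true once the stream ends
// (an assumption mirroring how the tutorial uses the hook's idle flag).
function consumeStream(stream, onUpdate) {
  let response = "";
  for (const chunk of stream) {
    response += chunk;
    onUpdate({ response, idle: false });
  }
  onUpdate({ response, idle: true });
  return response;
}

const updates = [];
const finalResponse = consumeStream(
  fakeCompletionStream(["1 + 1", " = ", "2"]),
  (update) => updates.push(update)
);
console.log(finalResponse); // 1 + 1 = 2
```

Each update corresponds to a re-render in which the page shows a slightly longer answer, and the button stays disabled until the final update.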

Although this code is short, a lot happens on our side that you don't have to worry about: failover, system instruction injection, PII redaction and blocking, caching, logging, and more. Our goal is to let you focus on your application features, not the LLM plumbing.
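PII redaction is likewise defined in the control panel rather than in your code. As a rough illustration of the idea, the sketch below replaces email addresses and phone-number-like strings with placeholders before a prompt would be forwarded to an LLM. The PII_PATTERNS list and the redactPII function are hypothetical and far simpler than real PII detection; they do not describe how LLMAsAService detects PII.

```javascript
// Illustrative patterns only; real PII detection covers far more cases.
const PII_PATTERNS = [
  { label: "EMAIL", regex: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g },
  { label: "PHONE", regex: /\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b/g },
];

// Replaces every match of each pattern with a placeholder such as [EMAIL].
// String.prototype.replace resets lastIndex for global regexes, so the
// shared pattern objects are safe to reuse across calls.
function redactPII(prompt) {
  let redacted = prompt;
  for (const { label, regex } of PII_PATTERNS) {
    redacted = redacted.replace(regex, `[${label}]`);
  }
  return redacted;
}

console.log(redactPII("Email jane.doe@example.com or call 555-123-4567."));
// Email [EMAIL] or call [PHONE].
```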

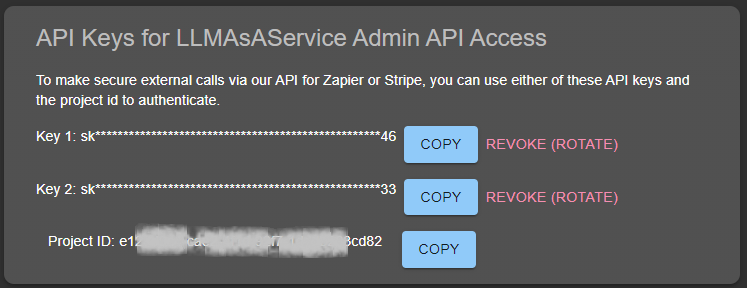

Your unique Project ID

Your project ID is the central identifier that links your hook to your LLMAsAService project configuration. To retrieve it, go to the Integration section of the control panel and use the COPY button to copy it to your clipboard.

The API keys, Key 1 and Key 2, are for public API access to manage customers and CRM workflows. You don't need these keys to call the chat service.